Deepfake & Synthetic Media Detection

Next-Generation AI vs AI Technology for Synthetic Content Protection

The Growing Threat of Synthetic Media

The rapid advancement of artificial intelligence and machine learning technologies has democratized the creation of highly convincing synthetic media, fundamentally challenging our ability to distinguish between authentic and artificially generated content. Deepfake technology, once requiring extensive technical expertise and computational resources, is now accessible through consumer applications and cloud services, enabling anyone to create realistic but fabricated video content with minimal effort.

This technological revolution presents unprecedented challenges for digital trust, information integrity, and user safety. Synthetic media can be weaponized for non-consensual intimate content, political disinformation, financial fraud, celebrity impersonation, and social manipulation campaigns. The consequences extend far beyond individual harm to threaten democratic processes, financial systems, and social cohesion. Protecting against these threats requires sophisticated detection technology that can stay ahead of rapidly evolving generation techniques.

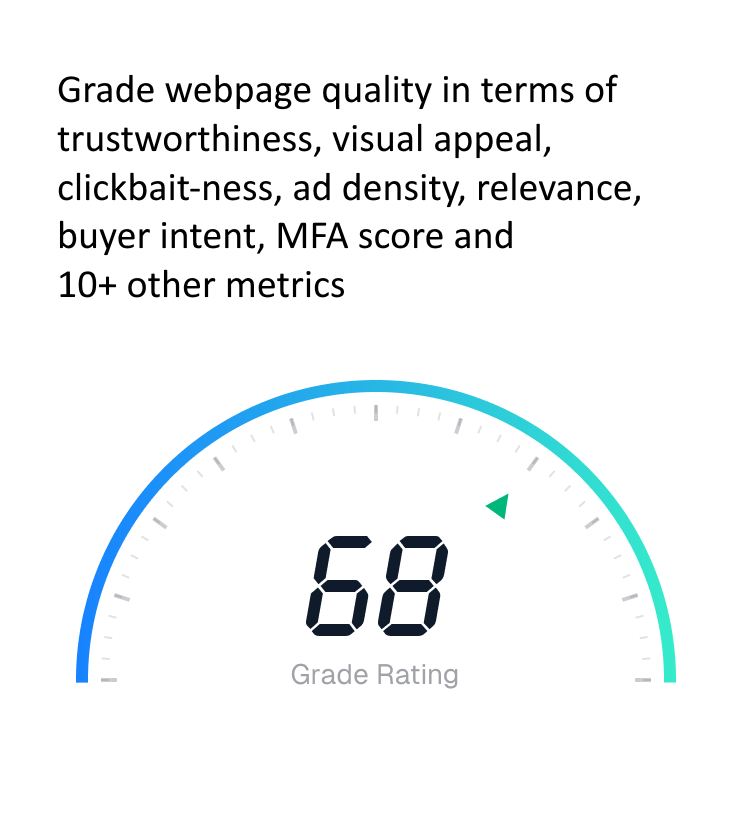

Synthetic Media Detection Performance

- 94.8% Detection Accuracy - Industry-leading synthetic content identification

- 12+ Generation Method Recognition - Comprehensive algorithm coverage

- Real-time Processing - Instant analysis for live content protection

- Multi-modal Analysis - Combined visual and audio synthetic detection

- Continuous Model Updates - Adaptive protection against new techniques

Understanding Deepfake Generation Technologies

Effective synthetic media detection requires comprehensive understanding of the technologies and techniques used to generate deepfakes and other forms of synthetic content. Our detection system is built upon detailed knowledge of generative adversarial networks (GANs), diffusion models, autoencoder architectures, and emerging synthesis techniques that enable the creation of increasingly sophisticated fake content.

Generative Adversarial Network Analysis

GANs underpin much of today's deepfake generation technology, pitting a generator network against a discriminator network to produce increasingly realistic synthetic content. Our detection system understands the specific artifacts and patterns produced by different GAN architectures, including StyleGAN, Progressive GAN (ProGAN), and CycleGAN, as well as by face-swapping tools such as FaceSwap and DeepFaceLab, which are built primarily on autoencoder architectures rather than GANs.

Each GAN architecture produces characteristic artifacts in the generated content - subtle inconsistencies in pixel patterns, frequency domain signatures, and temporal behaviors that our detection algorithms can identify. By maintaining comprehensive knowledge of these generation-specific patterns, our system can not only detect synthetic content but also identify the likely generation method used.

Diffusion Model Detection

Emerging diffusion-based generation models represent a new frontier in synthetic media creation, often producing higher quality results with fewer detectable artifacts than traditional GAN approaches. Our detection system incorporates specialized analysis capabilities for diffusion-generated content, identifying the unique characteristics and subtle imperfections that distinguish diffusion-based synthetic content from authentic material.

Diffusion model detection requires different analytical approaches than GAN detection, focusing on noise patterns, reconstruction artifacts, and the specific ways diffusion models handle fine details and textures. Combining these diffusion-specific checks with GAN-oriented analysis lets our system cover both established and emerging generation techniques.

Commercial Application Recognition

The proliferation of commercial deepfake applications and online services has created new categories of synthetic content with platform-specific characteristics. Our detection system includes specialized recognition capabilities for content generated by popular commercial applications, enabling identification of platform-specific artifacts and processing signatures.

Algorithm Fingerprinting

Identification of specific generation algorithms and their characteristic artifacts.

Quality Assessment

Analysis of generation quality and sophistication levels for risk evaluation.

Source Attribution

Identification of likely generation tools or platforms used for content creation.

Advanced Detection Methodologies

Our synthetic media detection system employs multiple complementary detection methodologies, each targeting different aspects of synthetic content generation. This multi-pronged approach ensures comprehensive detection coverage and maintains effectiveness even as generation techniques evolve and improve.

Temporal Inconsistency Analysis

One of the most reliable indicators of synthetic video content lies in temporal inconsistencies that arise from the frame-by-frame generation process used by most deepfake systems. Authentic video captures natural physiological movements, subtle expression changes, and consistent lighting that are difficult for current generation algorithms to replicate perfectly across extended sequences.

Our temporal analysis examines frame-to-frame consistency in facial landmarks, micro-expressions, eye movement patterns, and physiological indicators such as the faint skin-color changes caused by the pulse and natural breathing patterns. These biological markers are difficult for synthetic generation systems to replicate convincingly, so they can provide useful detection signals even in high-quality deepfakes.
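
As an illustration of frame-to-frame landmark consistency, the sketch below computes a simple jitter score from tracked facial landmarks. It is a minimal example under stated assumptions, not the production detector: the function name `landmark_jitter`, the `(frames, points, 2)` input layout, and the use of second-order differences are all chosen here for illustration, and the landmarks are assumed to come from an external face tracker. At least three frames are required.

```python
import numpy as np


def landmark_jitter(landmarks: np.ndarray) -> float:
    """Mean second-order frame difference of normalized landmark positions.

    landmarks: array of shape (frames, points, 2) from any face tracker
    (hypothetical input layout). Higher values mean less smooth motion.
    """
    pts = np.asarray(landmarks, dtype=float)
    # Remove global head translation by centering each frame on its centroid.
    centered = pts - pts.mean(axis=1, keepdims=True)
    # Remove scale changes, for example the subject moving toward the camera.
    scale = np.linalg.norm(centered, axis=2).mean(axis=1)[:, None, None]
    normalized = centered / scale
    # The second-order difference approximates per-landmark acceleration.
    accel = np.diff(normalized, n=2, axis=0)
    return float(np.linalg.norm(accel, axis=2).mean())
```

A rigid, smoothly moving face yields a score near zero, while landmarks that flicker independently from frame to frame push the score up. In practice such a score would be one feature among many rather than a verdict on its own.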

Frequency Domain Analysis

Synthetic content often exhibits characteristic patterns in frequency domain analysis that are not present in authentic content. Our frequency analysis examines spectral characteristics of video content, identifying anomalous frequency distributions that indicate artificial generation. This includes analysis of compression artifacts, upsampling patterns, and frequency domain signatures specific to different generation algorithms.

Advanced Fourier analysis and wavelet transforms reveal subtle patterns in how synthetic content distributes energy across different frequency bands, often showing regularities or anomalies that distinguish artificial content from naturally captured video. For example, the upsampling layers used in many generators can leave periodic peaks or atypical high-frequency energy in the spectrum.
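
To make the idea of energy distribution across frequency bands concrete, here is a minimal sketch that measures how much of a grayscale frame's spectral energy sits in the highest radial frequencies. The function names, the 32-band split, and the 0.75 cutoff are illustrative assumptions, not parameters of our system.

```python
import numpy as np


def radial_energy_profile(gray: np.ndarray, n_bins: int = 32) -> np.ndarray:
    """Fraction of spectral energy in each radial frequency band of a 2D image."""
    power = np.abs(np.fft.fftshift(np.fft.fft2(gray))) ** 2
    h, w = gray.shape
    yy, xx = np.indices((h, w))
    # Normalized radial frequency: 0 at DC, 1 at the Nyquist limit of each axis.
    radius = np.hypot((yy - h // 2) / (h / 2), (xx - w // 2) / (w / 2))
    # Corner frequencies beyond radius 1 are folded into the last band.
    bins = np.minimum((radius * n_bins).astype(int), n_bins - 1)
    energy = np.bincount(bins.ravel(), weights=power.ravel(), minlength=n_bins)
    total = energy.sum()
    return energy / total if total > 0 else energy


def high_frequency_ratio(gray: np.ndarray, cutoff: float = 0.75,
                         n_bins: int = 32) -> float:
    """Share of spectral energy above `cutoff` times the Nyquist frequency."""
    profile = radial_energy_profile(gray, n_bins)
    return float(profile[int(cutoff * n_bins):].sum())
```

A checkerboard-like upsampling pattern concentrates energy near the Nyquist frequency, so adding one to a smooth image raises `high_frequency_ratio`. Real detectors typically feed the whole profile to a classifier rather than thresholding a single ratio, since compression and resizing also reshape the spectrum.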

Biometric Authenticity Verification

Human biometric patterns provide another reliable foundation for synthetic media detection. Our biometric analysis examines subtle physiological indicators that are extremely difficult for current generation technology to replicate accurately, including natural blinking patterns, micro-saccadic eye movements, pulse visibility in facial video, and natural asymmetries in facial features.

These biometric indicators operate below the threshold of conscious perception but provide powerful signals for automated detection systems. The analysis is particularly effective because these physiological patterns are fundamental to human biology and cannot be easily replicated without comprehensive understanding of complex biological systems.
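
One widely used way to quantify blinking is the eye aspect ratio (EAR), computed from six landmarks around each eye. The sketch below is illustrative only: it assumes the landmarks are ordered p1 to p6 with p1 and p4 at the eye corners, and the 0.2 threshold and two-frame minimum are placeholder values that would need tuning per tracker and frame rate.

```python
import numpy as np


def eye_aspect_ratio(eye: np.ndarray) -> float:
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|) for six eye landmarks."""
    p1, p2, p3, p4, p5, p6 = np.asarray(eye, dtype=float)
    vertical = np.linalg.norm(p2 - p6) + np.linalg.norm(p3 - p5)
    horizontal = 2.0 * np.linalg.norm(p1 - p4)
    return float(vertical / horizontal)


def count_blinks(ear_series, threshold: float = 0.2, min_frames: int = 2) -> int:
    """Count runs of at least `min_frames` consecutive frames below `threshold`."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    # Close a run that reaches the end of the clip.
    if run >= min_frames:
        blinks += 1
    return blinks
```

A blink count that is implausibly low or high for the clip length could then be passed to a downstream classifier as one physiological feature.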

Physiological Pattern Analysis

Detection of natural human biological markers that are difficult to synthesize artificially.

Compression Artifact Examination

Analysis of video compression patterns that reveal synthetic generation processes.

Landmark Consistency Tracking

Monitoring facial and body landmark consistency across video sequences.

Audio-Visual Synchronization Analysis

Many deepfake creation processes focus primarily on visual generation while using original or separately generated audio, creating subtle but detectable mismatches between audio and visual elements. Our audio-visual synchronization analysis examines the precise relationship between spoken content and visual lip movements, facial expressions, and physiological indicators to identify synthetic content.

Lip-Sync Accuracy Assessment

Authentic speech exhibits precise synchronization between lip movements and spoken sounds, with specific timing relationships that are difficult for synthetic systems to replicate perfectly. Our lip-sync analysis examines phoneme-to-viseme matching, timing accuracy, and the natural variations that occur in authentic speech production.

The analysis extends beyond simple lip movement matching to include examination of tongue visibility, teeth positioning, jaw movement patterns, and the subtle facial muscle activations that accompany natural speech production. These comprehensive indicators provide robust detection capabilities even for sophisticated deepfakes.
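
A simplified view of timing accuracy is the lag that best aligns a mouth-opening signal with the audio energy envelope. The sketch below estimates that lag by normalized cross-correlation. It assumes both signals are already sampled at the video frame rate and have equal length; the function name and the `max_lag` default are hypothetical, and full phoneme-to-viseme matching is considerably more involved than this.

```python
import numpy as np


def estimate_av_lag(mouth_opening, audio_energy, max_lag: int = 10):
    """Return (lag, correlation) maximizing normalized cross-correlation.

    A positive lag means the mouth signal trails the audio by that many frames.
    """
    m = np.asarray(mouth_opening, dtype=float)
    a = np.asarray(audio_energy, dtype=float)
    # Standardize both signals so the mean product behaves like a correlation.
    m = (m - m.mean()) / (m.std() + 1e-12)
    a = (a - a.mean()) / (a.std() + 1e-12)
    n = len(m)
    best_lag, best_corr = 0, -np.inf
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = m[lag:], a[:n - lag]
        else:
            x, y = m[:n + lag], a[-lag:]
        corr = float(np.mean(x * y))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return best_lag, best_corr
```

A large absolute lag, or a weak peak correlation at every lag, suggests that the audio and the visible mouth movement do not belong together.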

Expression-Voice Correlation

Natural speech is accompanied by corresponding facial expressions, emotional indicators, and physiological changes that are synchronized with vocal characteristics. Our expression-voice correlation analysis examines whether visual emotional indicators align appropriately with audio emotional content, detecting mismatches that suggest synthetic generation.

This analysis includes examination of micro-expressions, emotional consistency, stress indicators, and the natural correlations between vocal characteristics and facial expression patterns that are difficult for current generation systems to replicate accurately.

Breathing and Speech Pattern Analysis

Natural speech production requires coordination between breathing patterns and vocal output, creating subtle but detectable physiological indicators. Our analysis examines whether visual breathing patterns align appropriately with speech rhythms and vocal stress patterns, identifying inconsistencies that suggest synthetic content.

Audio-Visual Detection Indicators

- Phoneme-Viseme Matching - Precise speech sound to lip movement correlation

- Emotional Consistency - Alignment between vocal and visual emotional indicators

- Physiological Coordination - Natural breathing and speech pattern relationships

- Micro-Expression Timing - Subtle facial expression synchronization with speech

- Voice-Face Identity Correlation - Consistency between speaker identity and facial features

Emerging Threat Detection

The rapidly evolving landscape of synthetic media generation requires detection systems that can adapt to new techniques and emerging threats. Our detection system incorporates advanced machine learning capabilities that enable continuous adaptation to new generation methods, emerging commercial applications, and sophisticated evasion techniques.

Few-Shot Learning Adaptation

When new generation techniques emerge, our detection system can rapidly adapt using few-shot learning approaches that require minimal training data to recognize new synthetic content patterns. This adaptive capability ensures that protection remains effective even as generation technology continues to evolve rapidly.

The few-shot learning system analyzes small samples of new synthetic content types to identify characteristic patterns and artifacts, enabling rapid deployment of updated detection capabilities without requiring extensive retraining or system downtime.
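
One common few-shot pattern is a nearest-prototype classifier over embeddings from a frozen feature extractor. The sketch below shows that pattern as an example only; it is not a description of our internal method, and it assumes the embeddings are produced elsewhere by some pretrained encoder. The function names and labels are hypothetical.

```python
import numpy as np


def build_prototypes(embeddings, labels):
    """Average the support embeddings of each class into one prototype vector."""
    embeddings = np.asarray(embeddings, dtype=float)
    prototypes = {}
    for label in sorted(set(labels)):
        mask = np.array([item == label for item in labels])
        prototypes[label] = embeddings[mask].mean(axis=0)
    return prototypes


def classify(embedding, prototypes):
    """Return the label whose prototype is nearest in Euclidean distance."""
    embedding = np.asarray(embedding, dtype=float)
    return min(prototypes,
               key=lambda label: np.linalg.norm(embedding - prototypes[label]))
```

Supporting a new generator then amounts to embedding a handful of its samples and adding one more prototype, which leaves the encoder itself untouched.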

Adversarial Attack Resistance

Sophisticated attackers may attempt to fool detection systems using adversarial techniques that specifically target known detection algorithms. Our system incorporates adversarial training and robust detection methodologies that maintain effectiveness even when facing targeted evasion attempts.

Adversarial resistance includes techniques such as ensemble detection methods, randomized analysis approaches, and detection algorithms that are inherently resistant to adversarial manipulation, ensuring reliable protection even against sophisticated attacks.
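
The combination of ensembles and randomization can be illustrated with a small fusion rule: draw a random subset of detectors for each request and take the median of their scores. This is a sketch under assumptions (the `fused_score` name, the median rule, and the subset size are illustrative), and it is only one of many possible robust fusion strategies.

```python
import numpy as np


def fused_score(detector_scores, subset_size: int,
                rng: np.random.Generator) -> float:
    """Median score over a randomly chosen subset of detectors."""
    scores = np.asarray(detector_scores, dtype=float)
    # Sampling without replacement means an attacker cannot know in advance
    # which detectors will be consulted for a given request.
    chosen = rng.choice(len(scores), size=subset_size, replace=False)
    return float(np.median(scores[chosen]))
```

With scores such as `[0.90, 0.85, 0.05, 0.92, 0.88]`, where one detector has been fooled, every three-detector median stays at or above 0.85, whereas a plain mean over all five drops to 0.72.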

Zero-Day Synthetic Content Protection

Our detection system includes generic synthetic content indicators that can identify previously unknown generation techniques based on fundamental characteristics of artificial content generation. These zero-day protection capabilities provide defense against novel synthetic content even before specific detection algorithms have been developed.
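
A generic way to flag content from unseen generators is to model only authentic content and score how far a new sample falls from it. The sketch below uses Mahalanobis distance over feature vectors as a stand-in for that idea; the feature extraction step, the function names, and the `ridge` regularizer are assumptions for illustration rather than details of our system.

```python
import numpy as np


def fit_authentic_model(features, ridge: float = 1e-6):
    """Estimate the mean and inverse covariance of authentic-content features."""
    features = np.asarray(features, dtype=float)
    mean = features.mean(axis=0)
    # A small ridge term keeps the covariance matrix invertible.
    cov = np.cov(features, rowvar=False) + ridge * np.eye(features.shape[1])
    return mean, np.linalg.inv(cov)


def anomaly_score(x, mean, inv_cov) -> float:
    """Mahalanobis distance of one feature vector from the authentic model."""
    d = np.asarray(x, dtype=float) - mean
    return float(np.sqrt(d @ inv_cov @ d))
```

Because the model never sees synthetic examples, a high score says only that a sample is unlike known authentic content; it still needs confirmation from other signals before being treated as synthetic.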

Adaptive Learning Systems

Continuous adaptation to new generation techniques and emerging synthetic media types.

Evasion Detection

Recognition of attempts to specifically bypass or fool detection algorithms.

Novel Technique Identification

Detection of previously unknown generation methods through pattern analysis.

Real-World Application and Implementation

Implementing effective deepfake detection in real-world scenarios requires consideration of computational constraints, processing speed requirements, and integration with existing content moderation workflows. Our detection system has been optimized for practical deployment across various platform types and use cases.

Real-Time Detection Capabilities

Live streaming and real-time communication platforms require deepfake detection that operates with minimal latency while maintaining high accuracy. Our optimized detection algorithms can analyze video content in real time, enabling immediate protection against synthetic content in live contexts.

Real-time detection employs lightweight analysis methods that focus on the most reliable indicators while maintaining processing speeds compatible with live content delivery requirements.
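
One simple way to trade accuracy for latency is to score only every few frames and smooth the result over a short rolling window. The class below sketches that pattern. `StreamingDetector`, its parameters, and the `score_frame` callable are all hypothetical; `score_frame` stands in for whatever lightweight per-frame model is available.

```python
from collections import deque
from typing import Callable, Deque, Tuple


class StreamingDetector:
    """Scores every `stride`-th frame and smooths scores over a rolling window."""

    def __init__(self, score_frame: Callable[[object], float],
                 stride: int = 5, window: int = 8, threshold: float = 0.7):
        self.score_frame = score_frame  # hypothetical per-frame model
        self.stride = stride
        self.threshold = threshold
        self.scores: Deque[float] = deque(maxlen=window)
        self.frame_index = 0

    def update(self, frame) -> Tuple[float, bool]:
        """Consume one frame; return (rolling mean score, alert flag)."""
        # Frame 0 is always scored, so the window is never empty below.
        if self.frame_index % self.stride == 0:
            self.scores.append(self.score_frame(frame))
        self.frame_index += 1
        mean = sum(self.scores) / len(self.scores)
        return mean, mean >= self.threshold
```

A larger `stride` lowers compute cost per second of video, while a larger `window` reduces false alerts from a single noisy frame at the price of slower reaction.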

Batch Processing for Content Libraries

Large content libraries and video archives require efficient batch processing capabilities that can analyze vast quantities of content while providing detailed analysis reports. Our batch processing system supports parallel analysis of multiple video streams with comprehensive reporting and detailed confidence scoring.
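
Parallel analysis of a library can be sketched with Python's standard `concurrent.futures` module. In the example below, `analyze` is a hypothetical callable that takes a file path and returns a synthetic-content score; the function names and the 0.5 reporting threshold are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, List, Tuple


def analyze_library(paths: Iterable[str], analyze: Callable[[str], float],
                    max_workers: int = 4) -> Dict[str, float]:
    """Run `analyze` over many items in parallel and return scores keyed by path."""
    paths = list(paths)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so scores line up with paths.
        scores = list(pool.map(analyze, paths))
    return dict(zip(paths, scores))


def flagged(report: Dict[str, float],
            threshold: float = 0.5) -> List[Tuple[str, float]]:
    """Items at or above the threshold, most suspicious first."""
    hits = [(path, score) for path, score in report.items() if score >= threshold]
    return sorted(hits, key=lambda item: item[1], reverse=True)
```

Threads suit workloads dominated by I/O or by native code that releases the interpreter lock; for CPU-bound pure-Python analysis, `ProcessPoolExecutor` is the usual substitute.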

Integration with Content Workflows

Effective deepfake detection must integrate seamlessly with existing content moderation workflows, providing clear indicators of synthetic content probability along with detailed analysis reports that help human moderators make informed decisions about detected content.
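
As a sketch of how a probability and its supporting indicators might be handed to a moderation queue, the example below defines a small report type and a routing rule. The `DetectionReport` fields, the action names, and both thresholds are hypothetical and would be set by each platform's policy.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DetectionReport:
    synthetic_probability: float
    # Indicator name -> contribution strength (illustrative structure).
    indicators: Dict[str, float] = field(default_factory=dict)


def route(report: DetectionReport, review_at: float = 0.4,
          escalate_at: float = 0.9) -> str:
    """Map a synthetic-content probability to a moderation queue."""
    if report.synthetic_probability >= escalate_at:
        return "priority_review"
    if report.synthetic_probability >= review_at:
        return "human_review"
    return "allow"


def top_indicators(report: DetectionReport, n: int = 3) -> List[str]:
    """Names of the strongest indicators, for display to a human moderator."""
    ranked = sorted(report.indicators, key=report.indicators.get, reverse=True)
    return ranked[:n]
```

Keeping the final decision with a human reviewer, and showing the strongest indicators alongside the score, matches the workflow described above.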

Ethical Considerations and Responsible Deployment

Deepfake detection technology raises important ethical considerations around privacy, false accusations, and the potential for misuse. Our detection system incorporates responsible AI principles that balance effective protection against synthetic media threats with respect for user privacy and content creator rights.

Privacy-Preserving Analysis

Our detection system analyzes content characteristics without retaining personal information, so that biometric analysis respects user privacy while still providing effective synthetic content detection.

Transparency and Explainability

Detection decisions include detailed explanations of the analysis methods and indicators that led to synthetic content identification, enabling transparent review of detection decisions and supporting appeals processes for false positive cases.

Future Developments and Research

The arms race between synthetic media generation and detection continues to drive innovation in both offensive and defensive technologies. Our ongoing research focuses on next-generation detection methods, improved robustness against evasion attempts, and adaptation to emerging generation technologies including 3D deepfakes, real-time generation, and multimodal synthetic content.

Collaboration with leading research institutions and participation in academic conferences ensures that our detection capabilities remain at the forefront of technological development while contributing to the broader scientific understanding of synthetic media detection challenges.

Conclusion

Deepfake and synthetic media detection represents a critical frontier in digital content safety, requiring sophisticated technology, continuous adaptation, and careful consideration of ethical implications. Our comprehensive detection system provides the advanced capabilities necessary to identify synthetic content across various generation methods while respecting user privacy and content creator rights.

For platforms serious about protecting users from synthetic media threats, implementing advanced deepfake detection provides essential protection against one of the most sophisticated and evolving threats in the digital content landscape.